What is Kubernetes? And what is its architecture?

Containerization has cut the cord between software developers and the production environment. You still need a production system, of course, but you no longer have to worry about its specifics.

Apps are now bundled with the dependencies they need in a lightweight container instead of a VM. That is great! However, it doesn't provide immunity from system, network or disk failures. For example, if the data center where your servers are running is under maintenance, your application will go offline.

Kubernetes comes into the picture to solve these problems. It takes the idea of containers and extends it to work across multiple compute nodes (which could be cloud-hosted virtual machines or bare-metal servers). The idea is to have a distributed system for containerized applications to run on.

Why Kubernetes?

Now, why would you need to have a distributed environment in the first place?

There are multiple reasons. First and foremost is high availability: you want your e-commerce website to stay online 24/7, or you will lose business, and Kubernetes helps with that. Second is scalability, where you want to scale 'out'. Scaling out here means adding more compute nodes to give your growing application more legroom to operate.

Design and Architecture

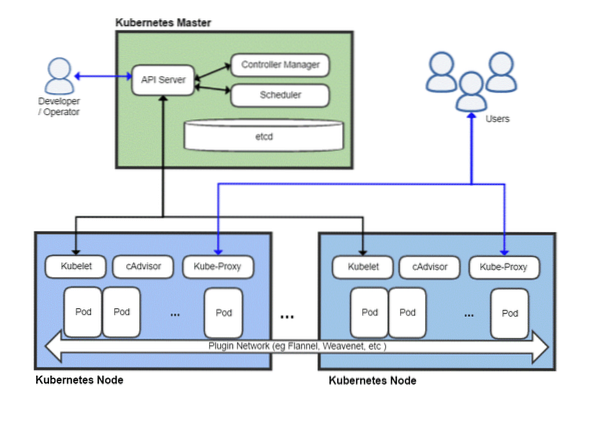

Like many distributed systems, a Kubernetes cluster has a master node and a number of worker nodes, which are where your applications actually run. The master is responsible for scheduling tasks, managing workloads and securely adding new nodes to the cluster.

Now, of course, the master node itself can fail and take the whole cluster with it, so Kubernetes actually allows you to have multiple master nodes for redundancy's sake.

A bird's eye view of a typical Kubernetes deployment

Kubernetes Master

The Kubernetes master is what the DevOps team interacts with and uses for provisioning new nodes, deploying new apps, and monitoring and managing resources. The master node's most basic task is to schedule the workload efficiently among all the worker nodes to maximize resource utilization, improve performance and follow the policies chosen by the DevOps team for their particular workload.
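To make the scheduling idea concrete, here is a minimal sketch in Python. It is not how the Kubernetes scheduler is implemented; the `Node` class, the `schedule` function and the CPU numbers are all made up for illustration. It only shows the general shape of the decision: filter out nodes that cannot fit the workload, then pick the best of the rest.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical worker node with a fixed CPU capacity (in millicores)."""
    name: str
    cpu_capacity: int
    cpu_used: int = 0
    pods: list = field(default_factory=list)

    @property
    def cpu_free(self) -> int:
        return self.cpu_capacity - self.cpu_used


def schedule(pod_name: str, cpu_request: int, nodes: list) -> Node:
    """Place a pod on the node with the most free CPU that can still fit it."""
    # Filter: keep only the nodes with enough free CPU for this pod.
    candidates = [n for n in nodes if n.cpu_free >= cpu_request]
    if not candidates:
        raise RuntimeError(f"no node can fit {pod_name}")
    # Score: prefer the node with the most free CPU (an assumed policy).
    best = max(candidates, key=lambda n: n.cpu_free)
    best.cpu_used += cpu_request
    best.pods.append(pod_name)
    return best


nodes = [Node("worker-1", 2000), Node("worker-2", 4000)]
placements = [schedule(f"web-{i}", 1500, nodes).name for i in range(3)]
print(placements)  # ['worker-2', 'worker-2', 'worker-1']
```

The "most free CPU" rule is just one possible policy. A real cluster weighs many more factors, including the policies the DevOps team configures.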

Another important component is etcd, a daemon that keeps track of the worker nodes and maintains a database storing the entire cluster's state. It is a key-value data store that can itself run as a distributed system across multiple master nodes. The contents of etcd give all the relevant data about the entire cluster. Worker nodes do not read etcd directly; they learn how they should behave by asking the API server, which reads the stored state on their behalf.
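As a rough mental model of a key-value store that holds cluster state, consider the toy class below. It is an in-memory sketch only: `TinyStore`, its methods and the key names are hypothetical and are not etcd's actual API or key layout. It illustrates two ideas, reading everything under a key prefix and using a growing revision number to find out what changed since the last look.

```python
class TinyStore:
    """A toy, in-memory key-value store with a global revision counter."""

    def __init__(self):
        self._data = {}      # key -> (value, revision of last write)
        self.revision = 0    # incremented on every write

    def put(self, key: str, value: str) -> int:
        self.revision += 1
        self._data[key] = (value, self.revision)
        return self.revision

    def get_prefix(self, prefix: str) -> dict:
        """Return all key/value pairs whose key starts with `prefix`."""
        return {k: v for k, (v, _) in sorted(self._data.items())
                if k.startswith(prefix)}

    def changed_since(self, revision: int) -> list:
        """Return the keys written after the given revision."""
        return sorted(k for k, (_, rev) in self._data.items() if rev > revision)


store = TinyStore()
store.put("/nodes/worker-1", "Ready")
store.put("/nodes/worker-2", "Ready")
store.put("/pods/web-0", "worker-1")

seen = store.revision                      # remember where we left off
store.put("/nodes/worker-2", "NotReady")   # something changes later

print(store.changed_since(seen))   # ['/nodes/worker-2']
print(store.get_prefix("/nodes/"))
```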

The controller is the entity that takes instructions from the API server (covered below) and performs the necessary actions, such as creating, deleting and updating applications and packages.
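One common way to think about a controller is as a loop that compares what you asked for with what is actually running, and then acts on the difference. The sketch below illustrates that idea with plain dictionaries of replica counts. The function names and the app names are hypothetical, and it is a simplification rather than the actual controller code.

```python
def reconcile(desired: dict, actual: dict) -> list:
    """Return the actions needed to move `actual` replica counts toward `desired`."""
    actions = []
    for app, want in desired.items():
        have = actual.get(app, 0)
        if have < want:
            actions.append(("create", app, want - have))
        elif have > want:
            actions.append(("delete", app, have - want))
    # Anything running that nobody asked for should be removed entirely.
    for app in actual:
        if app not in desired:
            actions.append(("delete", app, actual[app]))
    return actions


def apply_actions(actions: list, actual: dict) -> None:
    """Pretend to carry out the actions by updating the `actual` counts."""
    for verb, app, count in actions:
        delta = count if verb == "create" else -count
        actual[app] = actual.get(app, 0) + delta
        if actual[app] == 0:
            del actual[app]


desired = {"frontend": 3, "database": 1}
actual = {"frontend": 1, "legacy-job": 2}

actions = reconcile(desired, actual)
print(actions)
apply_actions(actions, actual)
print(actual == desired)  # True
```

Running `reconcile` again after the actions are applied returns an empty list, which is the point: the loop keeps running, but it only acts when reality drifts away from the desired state.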

The API server exposes the Kubernetes API, which uses JSON payloads over HTTPS, to the user interfaces that developer teams or DevOps personnel eventually end up interacting with. Both the web UI and the CLI consume this API to interact with the Kubernetes cluster.

The API server is also responsible for the communication between the worker nodes and various master node components like etcd.
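To give a feel for what "JSON payloads over HTTPS" means in practice, the sketch below builds the kind of request a client could send to create a pod. Nothing is actually sent over the network. The server address, the pod name and the image tag are placeholders chosen for illustration, and authentication is left out entirely.

```python
import json

# Hypothetical API server address; a real cluster has its own.
API_SERVER = "https://kubernetes.example.internal:6443"

# A minimal Pod description: one container running a web server image.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "hello-web", "labels": {"app": "hello-web"}},
    "spec": {
        "containers": [
            {"name": "web", "image": "nginx:1.25",
             "ports": [{"containerPort": 80}]}
        ]
    },
}


def build_create_pod_request(namespace: str, manifest: dict) -> dict:
    """Describe the HTTPS request that would create the pod (not sent here)."""
    return {
        "method": "POST",
        "url": f"{API_SERVER}/api/v1/namespaces/{namespace}/pods",
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(manifest),
    }


request = build_create_pod_request("default", pod_manifest)
print(request["method"], request["url"])
print(request["body"])
```

Tools such as the CLI and the web UI do essentially this on your behalf, plus the authentication and error handling that this sketch skips.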

The Master node is never exposed to the end-user as it would risk the security of the entire cluster.

Kubernetes Nodes

A machine (physical or virtual) needs a few important components which, once installed and set up properly, turn that server into a member of your Kubernetes cluster.

The first thing you will need is a container runtime, like Docker, installed and running on it. It is responsible for spinning up and managing containers.

Along with the container runtime, we also need the kubelet daemon. It communicates with the master nodes via the API server, through which it learns the cluster state stored in etcd, and it reports back health and usage information about the pods running on that node.
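The reporting side of this can be pictured as a heartbeat: each node periodically sends a status report, and the master treats a node as unhealthy if it has been silent for too long. The sketch below models only that idea. `StatusReport`, `HeartbeatTracker`, the fields and the 40-second timeout are all assumptions made for the example, not the kubelet's real data structures or defaults.

```python
from dataclasses import dataclass


@dataclass
class StatusReport:
    """A hypothetical status message sent by a node agent."""
    node: str
    timestamp: float      # seconds, from any monotonic clock
    pods_running: int
    cpu_percent: float


class HeartbeatTracker:
    """Master-side bookkeeping of the latest report from each node."""

    def __init__(self, timeout_seconds: float):
        self.timeout = timeout_seconds
        self.last_report = {}

    def receive(self, report: StatusReport) -> None:
        self.last_report[report.node] = report

    def unhealthy_nodes(self, now: float) -> list:
        """Nodes whose latest report is older than the timeout."""
        return sorted(name for name, report in self.last_report.items()
                      if now - report.timestamp > self.timeout)


tracker = HeartbeatTracker(timeout_seconds=40.0)
tracker.receive(StatusReport("worker-1", timestamp=100.0, pods_running=4, cpu_percent=35.0))
tracker.receive(StatusReport("worker-2", timestamp=130.0, pods_running=2, cpu_percent=12.5))

print(tracker.unhealthy_nodes(now=150.0))  # ['worker-1']
```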

However, containers are pretty limited by themselves, so Kubernetes has a higher-level abstraction built on top of a collection of containers, known as a pod.

Why come up with pods?

Docker encourages running one application per container, often described as the “one process per container” policy. This means that if you need a WordPress site, you are encouraged to have two containers: one for the database and another for the web server. Bundling such related components of an application into a pod ensures that whenever you scale out, the two interdependent containers always coexist on the same node and can thus talk to each other quickly and easily.

Pods are the fundamental unit of deployment in Kubernetes. When you scale out, you add more pods to the cluster. Each pod is given its own unique IP address within the internal network of the cluster.
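Here is a small sketch of what scaling out by pods looks like as data. Each replica is a pod that carries the same group of containers and receives its own address from an internal range. The `Pod` class, the `scale_out` helper and the `10.244.0.0/24` range are assumptions chosen for the example; a real cluster assigns addresses through its own networking layer.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Pod:
    """A hypothetical pod: a named group of containers with one IP address."""
    name: str
    ip: str
    containers: tuple


def scale_out(app: str, containers: list, replicas: int,
              cidr: str = "10.244.0.0/24") -> list:
    """Create `replicas` pods, each with the same containers and a unique IP."""
    hosts = iter(ipaddress.ip_network(cidr).hosts())  # usable addresses in order
    return [Pod(f"{app}-{i}", str(next(hosts)), tuple(containers))
            for i in range(replicas)]


pods = scale_out("wordpress", ["web-server", "database"], replicas=3)
for pod in pods:
    print(pod.name, pod.ip, pod.containers)
```

Every pod ends up with both containers and a distinct address, which mirrors the two points made above: related containers travel together, and each pod is individually addressable inside the cluster.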

Back to the Kubernetes Node

Now, a node can run multiple pods and there can be many such nodes. This is all fine until you think about communicating with the external world. If you have a simple web-based service, how would you point your domain name to this collection of pods with many IP addresses?

You can't, and you don't have to! Kube-proxy is the final piece of the puzzle that enables operators to expose certain pods to the Internet. For example, your front end can be made publicly accessible, and kube-proxy would distribute the traffic among all the pods responsible for hosting it. Your database, however, need not be made public, and kube-proxy would allow only internal communication for such back-end workloads.
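The traffic-distribution idea can be sketched as one stable entry point that hands each incoming request to one of several pod addresses. The toy `Service` class below uses simple round-robin and a `public` flag. Both are simplifications picked for the example, and the class, its methods and the addresses are hypothetical rather than a description of how kube-proxy actually selects a pod.

```python
import itertools


class Service:
    """A toy stand-in for one stable entry point in front of many pods."""

    def __init__(self, name: str, pod_ips: list, public: bool = False):
        self.name = name
        self.public = public
        self._cycle = itertools.cycle(pod_ips)  # round-robin over the pods

    def route(self, from_internet: bool = False) -> str:
        """Return the pod IP that should handle the next request."""
        if from_internet and not self.public:
            raise PermissionError(f"{self.name} is internal only")
        return next(self._cycle)


frontend = Service("frontend", ["10.244.0.1", "10.244.0.2", "10.244.0.3"], public=True)
database = Service("database", ["10.244.0.9"])

targets = [frontend.route(from_internet=True) for _ in range(4)]
print(targets)           # ['10.244.0.1', '10.244.0.2', '10.244.0.3', '10.244.0.1']
print(database.route())  # internal traffic is allowed: 10.244.0.9
```

Your domain name only needs to point at the front-end entry point. Which pod actually answers a given request is decided behind it.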

Do you need all of this?

If you are just starting out as a hobbyist or a student, using Kubernetes for a simple application would actually be inefficient. The entire rigmarole would consume more resources than your actual application and would add more confusion for a single individual.

However, if you are going to work with a large team and deploy your apps for serious commercial use, Kubernetes is worth the additional overhead. You can stop things from getting chaotic, make room for maintenance without any downtime, set up nifty A/B testing conditions and scale out gradually without spending too much on infrastructure up front.
