Monitoring and analyzing logs for various infrastructures in real time can be a tedious job. When dealing with services like web servers that constantly log data, the process can be very complex and nearly impossible to do by hand.

As such, knowing how to use tools to monitor, visualize, and analyze logs in real-time can help you trace and troubleshoot problems and monitor suspicious system activities.

This tutorial will discuss how you can use one of the best real-time log collection and analysis tools: ELK. Using ELK, short for Elasticsearch, Logstash, and Kibana, you can collect, store, and analyze log data from an Apache web server in real time.

What is ELK Stack?

ELK is an acronym used to refer to three main open-source tools: Elasticsearch, Logstash, and Kibana.

Elasticsearch is an open-source tool developed to find matches within a large collection of datasets using a selection of query languages and types. It is a lightweight and fast tool capable of handling terabytes of data with ease.

Logstash is the link between the server side and Elasticsearch, allowing you to collect data from a variety of sources and send it to Elasticsearch. It offers powerful APIs that integrate easily with applications developed in various programming languages.

Kibana is the final piece of the ELK stack. It is a data visualization tool that allows you to analyze the data visually and generate insightful reports. It also offers graphs and animations that can help you interact with your data.

Together, the three tools of the ELK stack cover log collection, storage, search, and visualization.

Although the concepts we'll discuss in this tutorial will give you a good understanding of the ELK stack, consult the documentation for more information.

Elasticsearch: https://linkfy.to/Elasticsearch-Reference

Logstash: https://linkfy.to/LogstashReference

Kibana: https://linkfy.to/KibanaGuide

How to Install Apache?

Before we begin installing Apache and all dependencies, it's good to note a few things.

We tested this tutorial on Debian 10.6, but it will also work with other Linux distributions.

Depending on your system configuration, you need sudo or root permissions.

ELK stack compatibility and usability may vary depending on versions.

The first step is to ensure you have your system fully updated:

sudo apt-get update

sudo apt-get upgrade

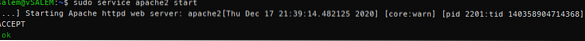

The next step is to install the apache2 web server. If you want a minimal Apache installation, remove the documentation and utilities packages (apache2-doc and apache2-utils) from the command below.

sudo apt-get install apache2 apache2-utils apache2-doc -y

sudo service apache2 start

By now, you should have an Apache server running on your system.

How to Install Elasticsearch, Logstash, and Kibana?

We now need to install the ELK stack. We will be installing each tool individually.

Elasticsearch

Let us start by installing Elasticsearch. We are going to use apt to install it, but you can get a stable release from the official download page here:

https://www.elastic.co/downloads/elasticsearch

Elasticsearch requires Java to run. Luckily, the latest version comes bundled with an OpenJDK package, removing the hassle of installing it manually. If you need to do a manual installation, refer to the following resource:

https://www.elastic.co/guide/en/elasticsearch/reference/current/setup.html#jvm-version

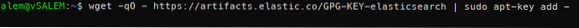

In the next step, we need to download and install the official Elastic APT signing key using the command:

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

You may also need the apt-transport-https package (required for packages served over HTTPS) before proceeding with the installation.

sudo apt-get install apt-transport-https

Now, add the apt repo information to the sources.list.d file.

echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee /etc/apt/sources.list.d/elastic-7.x.list

Then update the packages list on your system.

sudo apt-get update

Install Elasticsearch using the command below:

sudo apt-get install elasticsearch

With Elasticsearch installed, enable it to start on boot and start the service with the systemctl commands:

sudo systemctl daemon-reload

sudo systemctl enable elasticsearch.service

sudo systemctl start elasticsearch

The service may take a while to start. Wait a few minutes and confirm that the service is up and running with the command:

sudo systemctl status elasticsearch.service

Using cURL, test if the Elasticsearch API is available, as shown in the JSON output below:

curl -X GET "localhost:9200/?pretty"

{
  "name" : "debian",
  "cluster_name" : "elasticsearch",
  "cluster_uuid" : "VZHcuTUqSsKO1ryHqMDWsg",
  "version" : {
    "number" : "7.10.1",
    "build_flavor" : "default",
    "build_type" : "deb",
    "build_hash" : "1c34507e66d7db1211f66f3513706fdf548736aa",
    "build_date" : "2020-12-05T01:00:33.671820Z",
    "build_snapshot" : false,
    "lucene_version" : "8.7.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}
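Since ELK compatibility depends on versions, it can be handy to pull the version number out of this response in a script. The following is a minimal sketch of one way to do that with sed. It works on a trimmed copy of the response shown above so that it runs without a live server, and the variable names (response, es_version) are illustrative only.

```shell
# Trimmed sample of the JSON response shown above (only the keys we need).
response='{
  "name" : "debian",
  "version" : {
    "number" : "7.10.1"
  }
}'

# Print only the value of the "number" key; tolerates optional spaces around the colon.
es_version=$(printf '%s\n' "$response" | sed -n 's/.*"number" *: *"\([^"]*\)".*/\1/p')

echo "Elasticsearch version: $es_version"
```

Against a running node, the sample could be replaced with response=$(curl -s "localhost:9200/?pretty").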

How to install Logstash?

Install the logstash package using the command:

sudo apt-get install logstash

How to install Kibana?

Enter the command below to install kibana:

sudo apt-get install kibana

How to Configure Elasticsearch, Logstash, and Kibana?

Here's how to configure the ELK stack:

How to Configure Elasticsearch?

In Elasticsearch, data is organized into indices. Each index has one or more shards. A shard is a self-contained search engine that handles indexing and queries for a subset of the index's data within the cluster. Each shard is an instance of a Lucene index.

Before version 7.0, a default Elasticsearch installation created five shards and one replica for every index; from 7.0 onward, the default is one shard and one replica. Multiple shards and replicas are a good mechanism in production. However, in this tutorial, we will work with one shard and no replicas.
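To see what these two settings mean in practice, the number of shard copies Elasticsearch has to place is the number of primary shards multiplied by one plus the number of replicas. The sketch below only does that arithmetic; shard_copies is a hypothetical helper name, not an Elasticsearch command. On a single-node setup like this one, replica copies have no second node to be allocated to, which is one reason to use zero replicas for development.

```shell
# total shard copies = primaries * (1 + replicas)
# shard_copies is a hypothetical helper used only for this illustration.
shard_copies() {
  echo $(( $1 * (1 + $2) ))
}

five_and_one=$(shard_copies 5 1)   # five primaries, one replica each
one_and_zero=$(shard_copies 1 0)   # the setting used in this tutorial

echo "5 primaries, 1 replica:  $five_and_one shard copies per index"
echo "1 primary, 0 replicas:   $one_and_zero shard copy per index"
```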

Start by creating an index template in JSON format. In the file, we will set the number of shards to one and the number of replicas to zero for all matching index names, which suits development purposes.

In Elasticsearch, an index template tells Elasticsearch which settings to apply to an index when the index is created.

Inside the JSON template file (index_template.json), enter the following instructions:

{
  "template": "*",
  "settings": {
    "index": {
      "number_of_shards": 1,
      "number_of_replicas": 0
    }
  }
}

Using cURL, upload the JSON file as a template named defaults, which will be applied to all indices created afterwards.

curl -X PUT http://localhost:9200/_template/defaults -H 'Content-Type:application/json' -d @index_template.json

{"acknowledged":true}

Once applied, Elasticsearch will respond with an acknowledged: true statement.

How to Configure Logstash?

For Logstash to gather logs from Apache, we must configure it to watch the log files for changes, then collect, process, and save the new entries to Elasticsearch. For that to happen, you need to tell Logstash the path of the logs to collect.

Start by creating the Logstash configuration in the file /etc/logstash/conf.d/apache.conf:

input {
  file {
    path => '/var/www/*/logs/access.log'
    type => "apache"
  }
}

filter {
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
}

output {
  elasticsearch { }
}
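The grok filter in this configuration splits each access-log line into named fields such as clientip, verb, request, response, and bytes. As a rough illustration of that idea, the sketch below pulls the same fields out of one line with awk. The log line is a made-up sample in Apache's combined format, and whitespace splitting is only an approximation of what the COMBINEDAPACHELOG pattern does, not how Logstash implements it.

```shell
# Hypothetical access-log line in Apache's combined log format.
line='127.0.0.1 - - [05/Dec/2020:10:15:32 +0000] "GET /index.html HTTP/1.1" 200 10701 "-" "curl/7.64.0"'

# Split on whitespace and label the fields with the names grok assigns.
# Field 6 is the HTTP verb with a leading quote, which gsub strips.
parsed=$(printf '%s\n' "$line" | awk '{
  gsub(/"/, "", $6)
  printf "clientip=%s verb=%s request=%s response=%s bytes=%s", $1, $6, $7, $9, $10
}')

echo "$parsed"
```

These field names are what you later search on in Kibana, which is why a status-code query is written against the response field.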

Now enable and start the Logstash service.

sudo systemctl enable logstash.service

sudo systemctl start logstash.service

How to enable and configure Kibana?

To enable Kibana, edit the main .yml config file located in /etc/kibana/kibana.yml. Locate the following entries and uncomment them. Once done, use systemctl to start the Kibana service.

server.port: 5601

server.host: "localhost"

sudo systemctl enable kibana.service && sudo systemctl start kibana.service

Kibana creates index patterns based on the data processed. Hence, you need to collect logs using Logstash and store them in Elasticsearch, which Kibana can use. Use curl to generate logs from Apache.
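One simple way to generate some Apache log entries is to request the default page a few times in a loop. This is a minimal sketch that assumes Apache is listening on http://localhost/ (the default after installation); the request count and the sent counter are arbitrary choices for illustration.

```shell
# Send a handful of requests so that Apache writes new access-log entries.
# Assumption: Apache answers on http://localhost/ (default install).
sent=0
for i in 1 2 3 4 5; do
  # -s: silent, -o /dev/null: discard the body, -w: print only the HTTP status code
  curl -s -o /dev/null -w '%{http_code}\n' --max-time 2 http://localhost/ || true
  sent=$((sent + 1))
done

echo "requests attempted: $sent"
```

A printed status of 000 means curl could not connect, in which case check that the apache2 service is running.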

Once you have logs from Apache, launch Kibana in your browser using the address http://localhost:5601, which will launch the Kibana index page.

On the main page, you need to configure the index pattern used by Kibana to search for logs and generate reports. By default, Kibana uses the logstash* index pattern, which matches all the default indices generated by Logstash.

If you have no custom configuration, click Create to start viewing the logs.

How to View Kibana Logs?

As you continue to perform Apache requests, Logstash will collect the logs and add them to Elasticsearch. You can view these logs in Kibana by clicking on the Discover option on the left menu.

The Discover tab allows you to view the logs as the server generates them. To view the details of a log entry, simply click the dropdown arrow next to it.

Read and understand the data from the Apache logs.

How to Search for Logs?

In the Kibana interface, you will find a search bar that allows you to search for data using query strings.

Example: status:active

Learn more about ELK query strings here:

https://www.elastic.co/guide/en/elasticsearch/reference/5.5/query-dsl-query-string-query.html#query-string-syntax

Since we are dealing with Apache logs, one possible match is a status code. Hence, search:

response:200

This query searches for logs with a status code of 200 (OK) and displays them in Kibana.
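The same query string can also be sent straight to Elasticsearch through its URI search (the q parameter of the _search endpoint), which is useful for checking that documents exist before looking in Kibana. The sketch below assumes Elasticsearch is on localhost:9200 and that Logstash wrote to its default logstash-* indices; both are assumptions about your setup, and the variable names are illustrative.

```shell
# Assumptions: Elasticsearch on localhost:9200, Logstash default index naming (logstash-*).
es_url="localhost:9200"
query="response:200"

# size=1 returns a single matching document; pretty formats the JSON.
search_url="$es_url/logstash-*/_search?q=$query&size=1&pretty"

curl -s --max-time 5 "$search_url" || echo "Elasticsearch not reachable at $es_url"
```

If the response contains a hits section with at least one document, the same query should also return results in the Kibana search bar.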

How to Visualize Logs?

You can create visual dashboards in Kibana by selecting the Visualize tab. Select the type of dashboard to create and select your search index. You can use the default for testing purposes.

Conclusion

In this guide, we gave an overview of how to use the ELK stack to manage logs. However, there is more to these technologies than this article can cover. We recommend exploring them on your own.
